TensorFlow: How to embed float sequences to fixed size vectors?

I am looking for methods to embed variable-length sequences of float values into fixed-size vectors. The input format is as follows:

[f1,f2,f3,f4]->[f1,f2,f3,f4]->[f1,f2,f3,f4]-> ... -> [f1,f2,f3,f4]

[f1,f2,f3,f4]->[f1,f2,f3,f4]->[f1,f2,f3,f4]->[f1,f2,f3,f4]-> ... -> [f1,f2,f3,f4]

...

[f1,f2,f3,f4]-> ... ->[f1,f2,f3,f4]

Each line is a variable-length sequence with a maximum length of 60. Each unit in a sequence is a tuple of 4 float values. I have already padded all sequences with zeros to the same length.
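To make the input layout concrete, here is a minimal NumPy sketch of the zero-padding step described above. The helper name `pad_sequences` and the toy values are assumptions made for illustration; only the maximum length of 60 and the 4 features per step come from the question.

```python
import numpy as np

MAX_LEN = 60      # maximum sequence length stated in the question
NUM_FEATURES = 4  # each step is a tuple of 4 floats


def pad_sequences(sequences, max_len=MAX_LEN, num_features=NUM_FEATURES):
    """Zero-pad variable-length sequences to [batch, max_len, num_features].

    Returns the padded float32 array and an int32 array of the true lengths.
    (Hypothetical helper, not part of any library.)
    """
    batch = np.zeros((len(sequences), max_len, num_features), dtype=np.float32)
    lengths = np.zeros(len(sequences), dtype=np.int32)
    for i, seq in enumerate(sequences):
        steps = np.asarray(seq, dtype=np.float32).reshape(-1, num_features)
        if len(steps) > max_len:
            raise ValueError("sequence %d is longer than max_len" % i)
        batch[i, :len(steps)] = steps  # real steps first, zeros after
        lengths[i] = len(steps)
    return batch, lengths


# Two toy sequences of length 2 and 3 (values are made up).
toy = [
    [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]],
    [[1.0, 1.1, 1.2, 1.3], [1.4, 1.5, 1.6, 1.7], [1.8, 1.9, 2.0, 2.1]],
]
padded, lengths = pad_sequences(toy)
print(padded.shape, lengths.tolist())
```

Keeping the true lengths alongside the padded array is optional, but it makes it possible later to tell real steps from padding.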

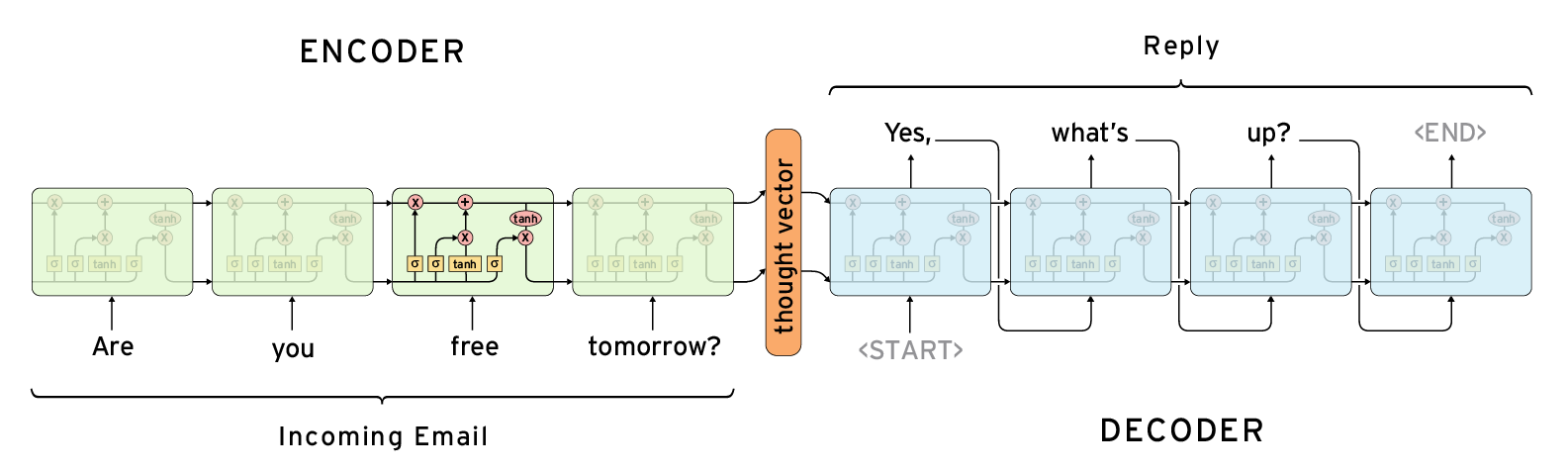

The architecture shown in the figure seems to solve my problem if I use the input itself as the target output. I need the thought vector in the center as the embedding of each sequence.

In TensorFlow, I have found two candidate methods: tf.contrib.legacy_seq2seq.basic_rnn_seq2seq and tf.contrib.legacy_seq2seq.embedding_rnn_seq2seq.

However, these two methods seem to be designed for NLP problems, where the input must be discrete values representing words.

So, are there other functions that would solve my problem?

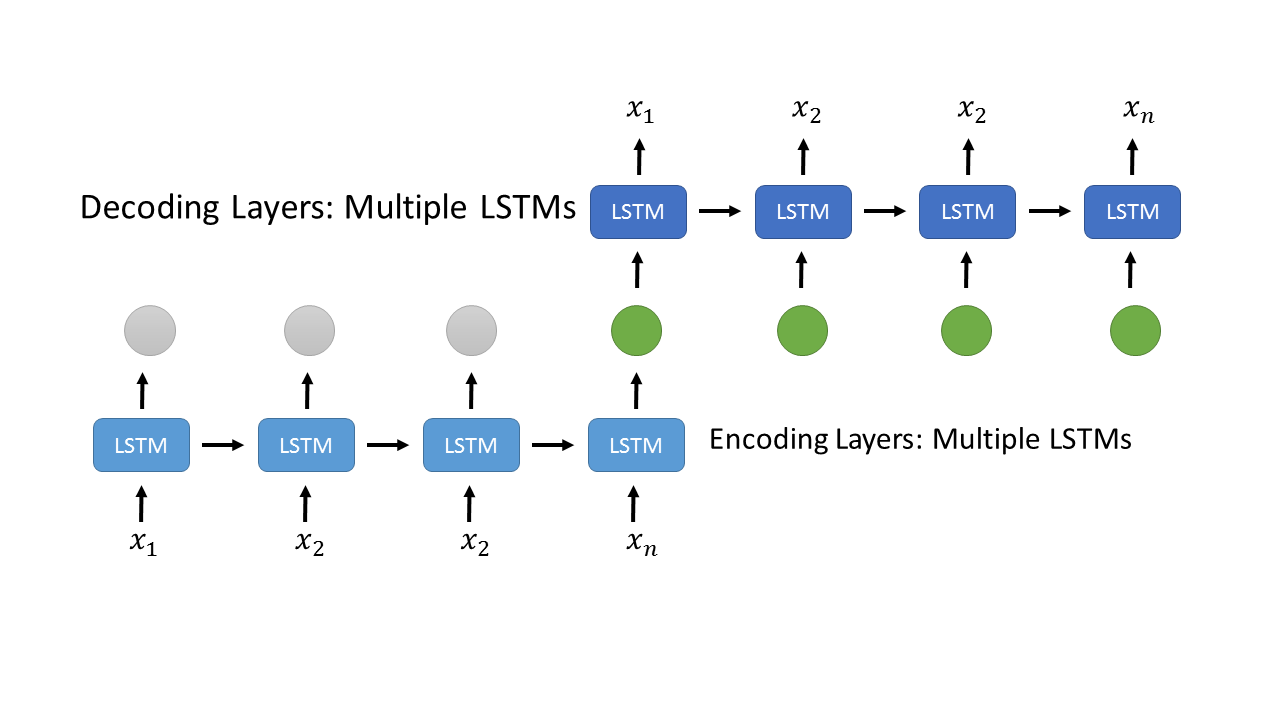

I have found a solution to my problem, using the following encoder-decoder architecture.

The LSTM layers at the bottom encode the series x1, x2, ..., xn. The last output (the green one) is duplicated once per input time step and fed to the decoding LSTM layers on top. The TensorFlow code is as follows:

import tensorflow as tf  # TensorFlow 1.x API (tf.contrib was removed in 2.x)

# conf is a configuration object providing max_series, series_feature_num,
# rnn_hidden_num and rnn_layer_num.

# input: [batch, max_series, series_feature_num]
series_input = tf.placeholder(tf.float32, [None, conf.max_series, conf.series_feature_num])
print("Encode input Shape", series_input.get_shape())

# encoding layer
encode_cell = tf.contrib.rnn.MultiRNNCell(
    [tf.contrib.rnn.BasicLSTMCell(conf.rnn_hidden_num, reuse=False) for _ in range(conf.rnn_layer_num)]
)
encode_output, _ = tf.nn.dynamic_rnn(encode_cell, series_input, dtype=tf.float32, scope='encode')
print("Encode output Shape", encode_output.get_shape())

# last output: switch to time-major, then take the final time step.
# This tensor, [batch, rnn_hidden_num], is the fixed-size embedding.
encode_output = tf.transpose(encode_output, [1, 0, 2])
last = tf.gather(encode_output, int(encode_output.get_shape()[0]) - 1)

# duplicate the last output of the encoding layer once per time step
decoder_input = tf.stack([last for _ in range(conf.max_series)], axis=1)
print("Decoder input shape", decoder_input.get_shape())

# decoding layer: its hidden size equals the feature count, so the
# decoder output has the same shape as the input series
decode_cell = tf.contrib.rnn.MultiRNNCell(
    [tf.contrib.rnn.BasicLSTMCell(conf.series_feature_num, reuse=False) for _ in range(conf.rnn_layer_num)]
)
decode_output, _ = tf.nn.dynamic_rnn(decode_cell, decoder_input, dtype=tf.float32, scope='decode')
print("Decode output", decode_output.get_shape())

# loss function: reconstruction error between the input and the decoded series
loss = tf.losses.mean_squared_error(labels=series_input, predictions=decode_output)
print("Loss", loss)
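One point worth noting: the code above takes the encoder output at index max_series - 1, which for a zero-padded sequence comes after the padding steps, and the mean squared error also counts those padded steps. A possible refinement, which is an assumption on my part and not something tested with the code above, is to keep the true length of each sequence, take the output at the last real step, and mask the loss. In TensorFlow 1.x, `tf.nn.dynamic_rnn` accepts a `sequence_length` argument intended for padded batches. The NumPy sketch below only illustrates the indexing and masking logic; `last_valid_output` and `masked_mse` are hypothetical helper names.

```python
import numpy as np


def last_valid_output(outputs, lengths):
    """Pick outputs[i, lengths[i] - 1] for each sequence i.

    outputs: [batch, time, hidden] array of per-step encoder outputs.
    lengths: [batch] array of true (unpadded) lengths, each >= 1.
    """
    lengths = np.asarray(lengths)
    return outputs[np.arange(outputs.shape[0]), lengths - 1]


def masked_mse(targets, predictions, lengths):
    """Mean squared error over real steps only, ignoring padded steps."""
    lengths = np.asarray(lengths)
    time = targets.shape[1]
    # mask[i, t] is 1.0 where step t is real data for sequence i, else 0.0
    mask = (np.arange(time)[None, :] < lengths[:, None]).astype(targets.dtype)
    squared_error = (targets - predictions) ** 2   # [batch, time, features]
    per_step = squared_error.mean(axis=2) * mask   # zero out padded steps
    return per_step.sum() / mask.sum()


# Toy check with made-up numbers: batch of 2, 3 time steps, 2 features.
outputs = np.arange(12, dtype=np.float32).reshape(2, 3, 2)
lengths = np.array([2, 3])
embedding = last_valid_output(outputs, lengths)  # rows outputs[0, 1] and outputs[1, 2]

targets = np.zeros((2, 3, 2), dtype=np.float32)
targets[0, :2] = 1.0   # sequence 0 has 2 real steps, then one padded step
targets[1, :3] = 1.0   # sequence 1 has 3 real steps
predictions = np.ones_like(targets)  # wrong only on the padded step
loss = masked_mse(targets, predictions, lengths)
```

In this toy case the unmasked mean squared error is nonzero purely because of the padded step, while the masked version ignores it.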
